Every day, state, county, and municipal governments improve citizen-facing services through the power of data science. Although it might sound complicated, “data science” simply means leveraging technology to find patterns in data. Identifying these patterns helps organizations better understand their business, communities, or the environment in which they operate.

This short post looks at an interesting statistical distribution, the Gumbel distribution, and shows how to use TensorFlow and SciPy to fit it to historical weather data provided by the government.

It was really cold yesterday in Chicago. My weather app said -22 degrees Fahrenheit, and there was a disturbingly thick layer of frost on some of my windows.

For those of you who are newcomers to Chicago — or are observing this frosty landscape from afar — you may be wondering: how often does it get this cold here?

Getting some weather data

Thankfully, the good people at the National Oceanic and Atmospheric Administration maintain a database of daily weather records going back many years. A lot of weather stations only have data from the past few decades, but the “Select Date Range” input on their climate data search page starts at 1763, and I was able to find a station at Midway airport with data going back to 1928. I filled out a little web form asking for minimum and maximum daily temperatures as well as daily snowfall, and then I got an email shortly after with a link to a nicely formatted CSV file with the data I was looking for. (As a side note, I’m pretty sure that this service was offline because of the partial government shutdown a few weeks ago.)

The Apocalyptic Gumbel distribution

With the data I found, I could have simply looked at how many times the temperature has gotten below -20°F, but being a data scientist, I wondered how to model data like this, particularly in order to answer the following question:

What’s the chance that a particular year will have a minimum temperature at least as extreme as -20°F?

There are lots of other interesting questions one might ask about the weather, but I’ll focus on that one and a couple similar to it.

Earlier in the winter, when the temperature was a balmy 10°F, I’d been reading about the Gumbel-Softmax trick for approximating samples from categorical distributions in deep learning models. That led me to the fascinating Gumbel distribution, which actual weather scientists (i.e., not me) use to model things like maximum water levels and extreme earthquake magnitudes. It describes the distribution of the maximum value of a collection of samples. (I won’t get into the underlying assumptions about where the samples come from, but I think applying it to annual minimum temperature data isn’t a stretch.)

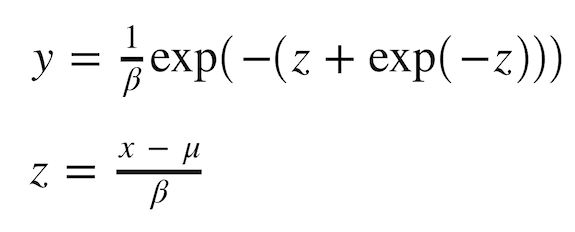

The form of the Gumbel distribution is quite simple. Here’s the probability density function:

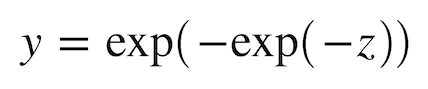

And the cumulative distribution function is as follows:
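In the standard parameterization for maximums, with location μ and scale β (the symbol names are assumed here, since they are not spelled out in the text), the density and the cumulative distribution function can be written as:

```latex
\begin{aligned}
z &= \frac{x - \mu}{\beta}, \\
f(x; \mu, \beta) &= \frac{1}{\beta} \exp\!\left(-\left(z + e^{-z}\right)\right), \\
F(x; \mu, \beta) &= \exp\!\left(-e^{-z}\right).
\end{aligned}
```

The chance of seeing a value at least as large as x is then 1 - F(x).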

TensorFlow isn’t (just) a deep learning tool

I’m sure there are various packages for estimating Gumbel distributions, but I decided to write a simple maximum likelihood estimation procedure in TensorFlow and SciPy. Rebuilding basic statistical functions from scratch generally doesn’t make sense, but in this case it illustrates how TensorFlow is useful not just for deep learning but also for math more generally.

In particular, optimization algorithms for statistical models (e.g., L-BFGS) often require an objective function and its gradient with respect to the model parameters. With TensorFlow, one only has to write the objective function and then call tf.gradients, which makes life easier. One can also use TensorFlow’s own optimizers to estimate models, but one doesn’t need to. For example, the objective function and gradient from TensorFlow can be passed into SciPy’s optimization package, either directly or through a user-contributed interface in the TensorFlow package.
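As a rough illustration of the objective-plus-gradient pattern described above, here is a minimal sketch that fits a Gumbel distribution by maximum likelihood with SciPy’s L-BFGS-B optimizer. It is not the code from the notebook: to avoid a TensorFlow dependency, the gradient is written out by hand, which is the step tf.gradients would otherwise automate. The function names, the log-scale parameterization, and the synthetic data are all assumptions made for this example.

```python
import numpy as np
from scipy.optimize import minimize


def gumbel_nll_and_grad(params, x):
    """Negative log-likelihood of a Gumbel (maximum) model and its gradient.

    params = [mu, log_beta]; optimizing log(beta) keeps the scale positive.
    """
    mu, log_beta = params
    beta = np.exp(log_beta)
    z = (x - mu) / beta
    e = np.exp(-z)
    # Per-sample negative log density: log(beta) + z + exp(-z)
    nll = np.sum(log_beta + z + e)
    # Hand-derived partial derivatives (the part autodiff would supply)
    d_mu = -np.sum(1.0 - e) / beta
    d_log_beta = np.sum(1.0 - z * (1.0 - e))
    return nll, np.array([d_mu, d_log_beta])


def fit_gumbel(x):
    """Return (mu_hat, beta_hat) estimated by maximum likelihood."""
    x = np.asarray(x, dtype=float)
    start = np.array([x.mean(), np.log(x.std())])
    # jac=True tells SciPy the function returns (objective, gradient)
    result = minimize(gumbel_nll_and_grad, start, args=(x,),
                      jac=True, method="L-BFGS-B")
    mu_hat, log_beta_hat = result.x
    return mu_hat, np.exp(log_beta_hat)


# Synthetic data with known parameters, purely to exercise the fit
rng = np.random.default_rng(0)
sample = rng.gumbel(loc=5.0, scale=2.0, size=5000)
mu_hat, beta_hat = fit_gumbel(sample)
print(f"mu = {mu_hat:.2f}, beta = {beta_hat:.2f}")
```

With 5,000 synthetic draws, the estimates should land close to the true location of 5 and scale of 2. For annual minimums, one option (an assumption here; the post does not spell out its approach) is to negate the values before fitting, since this form of the Gumbel distribution describes maximums.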

Some results

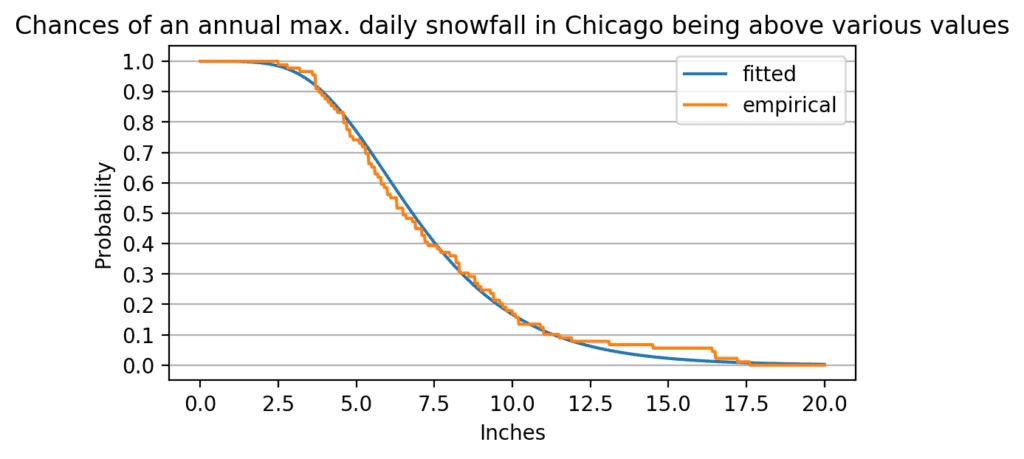

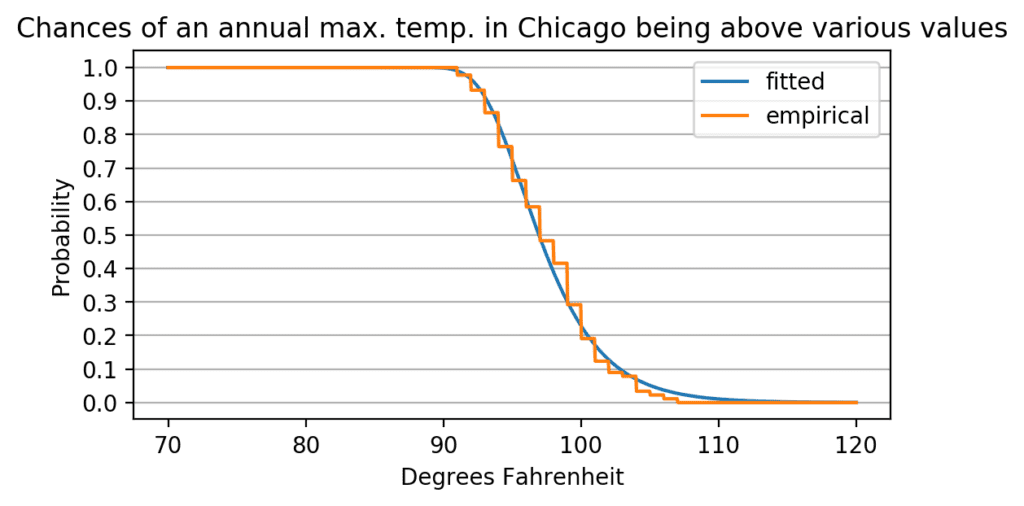

I decided to fit Gumbel distributions for three things: minimum temperatures in a year (in °F), maximum temperatures in a year (so I don’t have to write another blog post in the summer), and maximum daily snowfall values in a year (in inches).
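Each of those three series is one value per year, so the daily records first have to be reduced to annual extremes. The sketch below does that with only the standard library. The column names DATE, TMIN, TMAX, and SNOW and the ISO date format are assumptions about the export, and the rows are made-up toy values rather than NOAA data.

```python
import csv
import io
from collections import defaultdict


def annual_extremes(rows, column, pick=max):
    """Return {year: extreme value of `column`} from daily-record dicts.

    `pick` is max for yearly maximums or min for yearly minimums.
    """
    by_year = defaultdict(list)
    for row in rows:
        value = row.get(column)
        if value is None or value.strip() == "":
            continue  # skip days with no reading for this column
        year = int(row["DATE"][:4])  # assumes dates like 2001-01-05
        by_year[year].append(float(value))
    return {year: pick(values) for year, values in sorted(by_year.items())}


# Toy rows for illustration only (not real observations)
SAMPLE_CSV = """DATE,TMIN,TMAX,SNOW
2001-01-05,-3,15,0.0
2001-07-19,70,96,0.0
2001-12-11,20,31,4.5
2002-01-30,-11,2,1.0
2002-02-02,5,22,
2002-08-01,72,101,0.0
"""

rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
annual_min = annual_extremes(rows, "TMIN", min)
annual_max = annual_extremes(rows, "TMAX", max)
annual_snow = annual_extremes(rows, "SNOW", max)
print(annual_min, annual_max, annual_snow)
```

Reading the rows into a list once lets the same data be reduced three ways without reopening the file.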

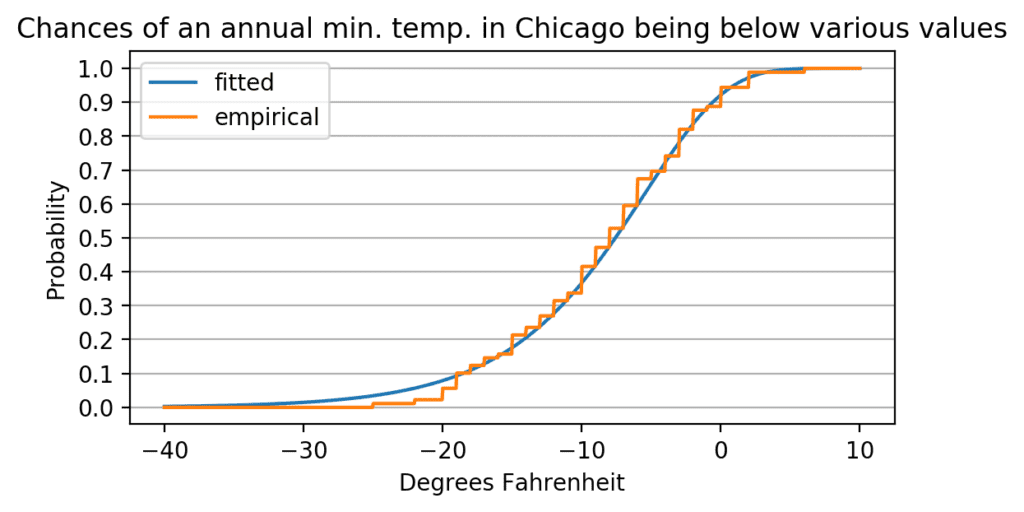

I’ve plotted cumulative distribution functions here because those seem the most interesting, and I’ve included the empirical values along with the fitted model predictions for comparison. The minimum temperature model seems to overestimate the chance of extremely cold temperatures a bit, but the fits seem mostly good.

It looks like there’s about a 5% chance of temperatures getting at or below -22°F in a year: the Gumbel model says 5.6%, and the raw data says 2.2% (2 out of 89). Also, if you can’t handle temperatures at or below -10°F, you might not want to live in northern Illinois because there’s about a 36.7% chance of temperatures getting that low during any particular year, according to the model (or 37 out of 89 = 41.6% for the raw data).
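To relate these annual probabilities back to the question of how often it gets this cold, they can be converted into an average gap between such years and into the chance of at least one such year over a longer stretch. This is a small sketch of that arithmetic; it assumes each year is independent of the others, which is a simplification, and the helper names are made up for this example.

```python
def return_period(annual_prob):
    """Average number of years between years that reach the threshold."""
    return 1.0 / annual_prob


def chance_within(annual_prob, years):
    """Chance of at least one qualifying year in `years` years.

    Assumes years are independent, which is a simplification.
    """
    return 1.0 - (1.0 - annual_prob) ** years


model_p = 0.056        # Gumbel model estimate for -22°F or colder, from the post
empirical_p = 2 / 89   # raw count from the post

for label, p in [("model", model_p), ("raw data", empirical_p)]:
    print(f"{label}: about one year in {return_period(p):.1f}, "
          f"{chance_within(p, 10):.1%} chance within a decade")
```

Under these assumptions, the model’s 5.6% works out to roughly one such year in 18, while the raw record’s 2 out of 89 is one in 44.5.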

Chicago doesn’t get as many big snowfalls as some places (e.g., the Northeast), but there’s still a 7.6% chance of at least one day with a foot or more of snowfall according to the snowfall model.

Chicago summers are pretty nice, though, and don’t have a big chance of extreme temperatures. Looking at the maximum temperature model, we see that there’s only a 22.9% chance of a day over 100°F in a year. I’ll take that chance.

Code: https://gist.githubusercontent.com/mheilman/24012cbf667dc07d2b4a8e9df30c0ba6/raw/40053a9dc3905bdc2f075c849f380a63ab397449/gumbel.ipynb